Examining AI Agent Infrastructure Opportunities

Key insights from industry leaders shaping the future of AI Agents

This article had input from leading founders in the AI agent space. Thank you for your time and insights…

Andrew Berman (Director of AI at Zapier), Ardy Naghshineh (CEO of Browse AI), Bella Liu (CEO of Orby), Eric Jing (CEO of Genspark), Jason Warner (CEO of Poolside.ai), Lauren Nemeth (COO of Pinecone), Lin Qiao (Founder and CEO of Fireworks AI), Mike Knoop (Co-founder and Head of AI of Zapier), Mike Sefanov (Director of Communications at Pinecone), Paul Klein IV (CEO of Browserbase), Philip Rathle (CTO of Neo4J), Rob Cheung (CEO of Substrate), Tod Sacerdoti (President of Pipedream), Zach Koch (CEO of Fixie.ai)

Thank you to Prerit Das for your collaboration and co-authorship on this research.

Introduction

This summer, we developed a perspective on the breakthroughs and potential investment opportunities within AI Agent infrastructure.

Imagine a world empowering humans to focus exclusively on what matters.

A world where the inanimate objects facilitating our lives—phones, cars, apps, emails—are intelligent and aware.

This is the promise of AI; specifically, the agent—an implementation of AI looping the real world into its decision-making.

AI agents perceive their environments, process information, and take actions to achieve predefined goals. They think and do. Imagine telling a personal AI agent (likely Siri) you want to have dinner with your friend Jack. It might first check with his agent to find a mutual time that works, find top restaurants in the area, book the reservation, then add the event to both calendars.

Sophisticated, hyper-aware, multi-step agents are a rarity today. They require a level of finesse, multi-order thinking, wisdom, intuition, and focus that hasn’t been unlocked by our current core AI stack.

But time flies in this AI era ;)

The vision of a world empowered by highly-sophisticated agents is becoming clearer. By researching, building, and thoroughly testing agent systems, we constructed a detailed map of the components powering them. We spent the last two months diving deep into the companies of today building our vision of tomorrow.

As we explored the investability of AI agents, an overarching hypothesis emerged:

The efficacy of a horizontal agent is predicated on its ability to intercommunicate, collaborate, and interpret its environment in real-time.

Agents must not only connect with the world, but with other agents, leading us to a lofty secondary research question:

Could a singular widely-adopted AI agent infrastructure tool dominate the market and power all agent interactions?

And if so, how monetizable and investable would it be? A company facilitating interactivity between agents at scale has the potential to be huge. We’ve witnessed this level of domination before. Consider Stripe (a private company valued at roughly $90B), which people interact with frequently: it enables a vast network of real-time online payment processing, crucial to fintech, e-commerce, and SaaS. They’re the shovel and the railroad in a frantic gold rush.

To dive deeper into these possibilities, we engaged directly with many leading AI agent infrastructure companies and their leadership. At a high level, their insights revealed five key takeaways shedding light on an agentic future:

Interoperability is the key to value creation.

API integration platforms play a pivotal role in connecting agentic systems.

Real-time data is critical for a real-world agent to make effective decisions.

The world will evolve towards “proactive” agents.

Trust and reliability are the two roadblocks preventing mass adoption of AI agents.

In the following sections, we'll dive deeper into each of these insights, explore the current state of AI agent technology, and examine the potential investment landscape. We'll conclude by revisiting our initial hypothesis and offering our perspective on the future of AI agent infrastructure.

Interoperability

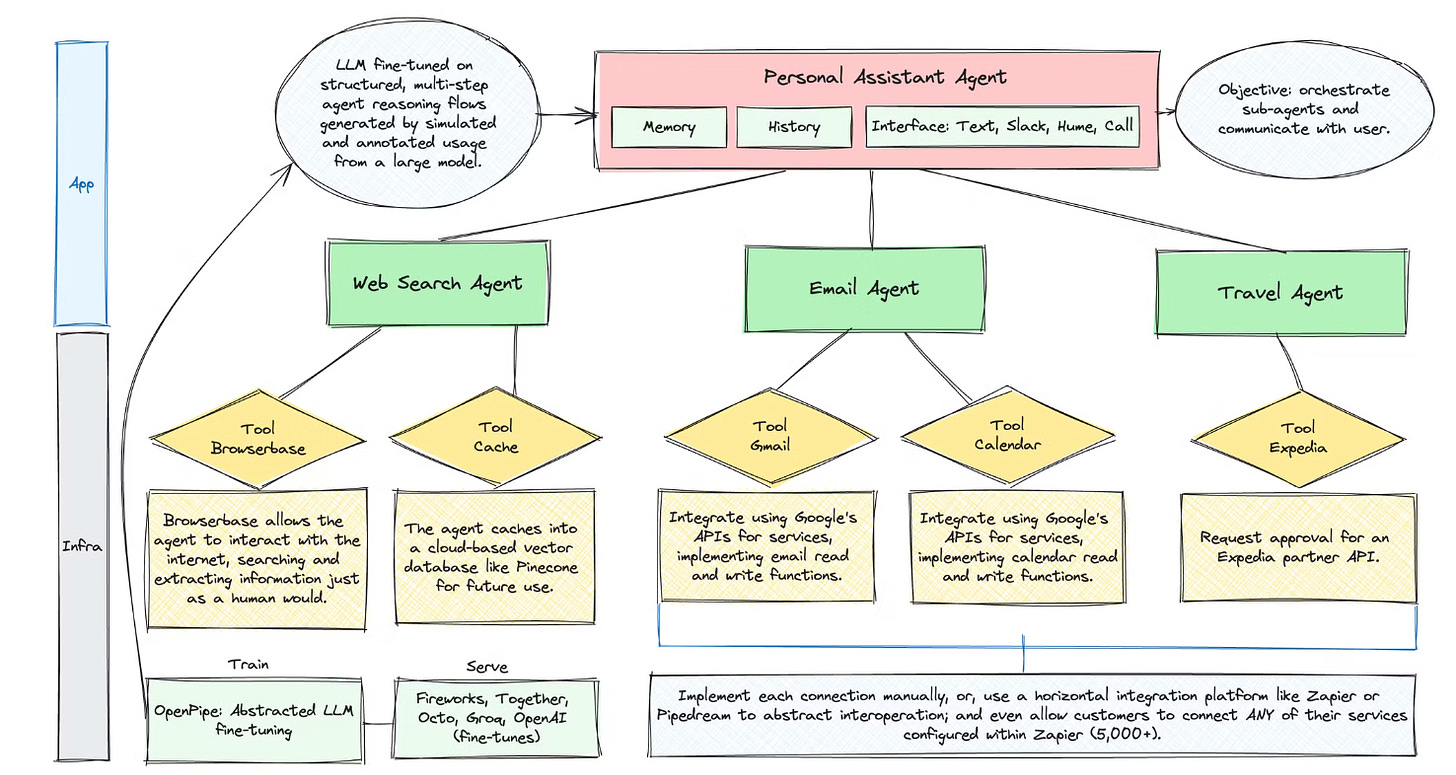

As shown, an AI agent consists of a brain (LLM) and a suite of tools. The brain decides when and how it wants to use a tool, outputting a signal to the software controlling it, which then facilitates the brain's command in the real world.

Consider the above architecture. Your (pink) personal assistant agent has a memory and a history to understand your goals and make informed decisions based on the past.

In this example, it can call on three sub-agents to complete delegated tasks. Note that in theory, the three sub-agents could be three hundred, dynamically rotating according to the environment.

Each sub-agent has its own tools, defined and interconnected. With hundreds of thousands of commonly-used services, perfect coverage with an agent is near-impossible. This is where interoperability-as-a-service tools shine.
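The brain-plus-tools loop described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's implementation: the `brain` function is a hard-coded stand-in for an LLM, and the tool names (`check_calendar`, `book_table`) are hypothetical.

```python
from typing import Callable

# Hypothetical tools: plain functions the orchestrating software can call.
def check_calendar(person: str) -> str:
    return f"{person} is free Friday at 7pm"

def book_table(time: str) -> str:
    return f"Table booked for {time}"

TOOLS: dict[str, Callable[[str], str]] = {
    "check_calendar": check_calendar,
    "book_table": book_table,
}

def brain(goal: str, history: list[str]) -> dict:
    """Stand-in for the LLM: returns the next tool call, or a final answer.

    A real model would derive this decision from the goal and history;
    here the policy is hard-coded so the sketch runs without any API.
    """
    if not history:
        return {"tool": "check_calendar", "arg": "Jack"}
    if len(history) == 1:
        return {"tool": "book_table", "arg": "Friday at 7pm"}
    return {"final": "Dinner with Jack is set: " + history[-1]}

def run_agent(goal: str, max_steps: int = 5) -> str:
    history: list[str] = []
    for _ in range(max_steps):
        decision = brain(goal, history)
        if "final" in decision:
            return decision["final"]
        # The controlling software, not the model, executes the tool.
        result = TOOLS[decision["tool"]](decision["arg"])
        history.append(result)
    return "Stopped: step limit reached"

print(run_agent("Have dinner with Jack"))
```

The step limit is a deliberate design choice: because the brain decides when to stop, the surrounding software needs its own bound on how long the loop may run.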

We believe that interoperability is not just a technical necessity, but the primary driver of value creation in agent infrastructure. The CEOs we spoke to unanimously agree; the future of AI agents hinges on seamless communication.

We spoke with Tod Sacerdoti, President of Pipedream (a platform that allows developers to build and run automated workflows connecting APIs, with both code-level control and no-code options). Tod emphasized that "there will be an infrastructure company that connects everything from apps, software, vehicles and devices."

We also interviewed Ardy Naghshineh, CEO of Browse AI (an automation software that allows users to extract and monitor structured data from any website by training robots to perform actions through a simple point-and-click interface). He said, "agents communicating and collaborating with one another seamlessly will multiply the generated value. The critical insight here is the need for an underlying infrastructure that transcends individual applications, enabling a networked ecosystem where AI agents can operate cohesively."

This is a sentiment that we kept hearing over and over.

For this research, we also interviewed Lin Qiao, Founder and CEO of Fireworks AI (a platform that enables fast, scalable AI inference by orchestrating multiple models and tools into efficient compound systems). Lin was previously Head of PyTorch, an open-source deep learning framework that enables rapid prototyping and scaling of machine learning models, crucial for generative AI like GANs. Companies like Meta, Tesla, OpenAI, and Microsoft use PyTorch for AI applications, from computer vision and autonomous driving to natural language processing and generative models like GPT.

Lin told us: "Interoperability is critical for the compound AI systems of the future. Real-world AI results (especially in enterprise production) come from compound systems. These compound systems tackle tasks using various interacting parts. For example: different models, modalities, retrievers, external tools, data and knowledge. Agents in a compound AI system use models and tools to complete individual tasks and solve complex problems as a collective, but they must be interoperable to function."

In our interview with Mike Knoop, co-founder and Head of AI of Zapier (a platform for creating automated workflows between apps and services), he said that "as context lengths get longer, and AI systems become more generally intelligent, the product bottlenecks will be more about where you can get access to unique data."

This is the key to the puzzle. With data so highly specialized, personalized, distributed, and fluid, re-centralizing it efficiently and privately in an LLM-parsable format is a Herculean task. Yet, Zapier managed it.

Zapier was seeded in 2011 by Bessemer, Salesforce, and a number of angels. They graduated from YC's 2012 batch and rocketed towards unicorn status. Relevant then, relevant now. Just as Nvidia made a bold bet on GPUs early, which paid off in gaming, crypto, and AI cycles, Zapier made a bet on the interconnectivity of data services.

We believe that companies like Zapier that streamline service connection and interoperability for an AI system single-handedly comprise a layer of the agent stack. Mike told us, "Zapier serves a little over 10M users today. The promise of agents is 10-100x the number of users who can use automation. Natural language and weak forms of AGI probably mean we can serve 100M-1B users."

Another company that caught our attention is Browserbase, which fits into the AI agent ecosystem as a crucial infrastructure layer that enables interoperability by automating complex web tasks and extracting structured data from unstructured sources. Its platform, which combines headless browser technology with AI, allows agents to interact with websites that don't have APIs or are outdated, functioning as the "eyes and ears" of AI systems in web-based interactions. This is particularly important for agents that need to retrieve real-time information or automate tasks on the web.

What makes Browserbase fascinating to us is that they allow AI agents to bypass traditional API limitations and access data directly from the web. This complements platforms like Zapier and Pipedream, which focus on integrating APIs for seamless automation, by addressing the limitations where APIs don't exist.

Building a web crawler is incredibly challenging, and it's unlikely that every agent-based company will develop their own. Historically, only a few companies, primarily search engines like Google, Bing, and Baidu, or those adjacent to search (such as Amazon and Facebook), have managed to maintain comprehensive, live-updated web crawls. Currently, no web search API provides full access to page content, parsed outlinks, or edit history. This gap presents a significant opportunity for companies like Browserbase, Browse AI, and others to innovate and fill the void.

Finally, we interviewed Jason Warner, CEO of Poolside.ai (a leading coding agent). He shared, "In the future, we'll have mega intelligence layers in the world - general-purpose ones like OpenAI and Anthropic, and domain-specific ones for areas like software, robotics, and drug discovery. Enterprises and individuals will mix and match these layers. For example, you might use OpenAI for general tasks, pipe programming tasks to Poolside (a software intelligence layer), and use that to control robotic functions. This interconnected ecosystem of AI layers will become the new foundation we build upon, though we're not quite there yet in terms of ubiquity and integration."

APIs and Integrations

The edges connecting nodes in a network of agents are the "how" to interoperability's "what."

Interoperability is the vision of seamless AI collaboration, while API and integration platforms are the practical tools and services that make this vision possible. Companies like Pipedream and Zapier are positioning themselves as crucial intermediaries that will enable the broader goal of AI interoperability.

Both Pipedream and Zapier see their roles as central in providing the necessary integration infrastructure for AI agents. Pipedream aims to be the API and integration infrastructure for AI agents, while Zapier's extensive use in AI automation highlights its critical position in connecting various data sources and applications. Other companies like Make (acquired by Celonis), MuleSoft (owned by Salesforce), and Workato also fit into this space.

The CEO of Browse AI, mentioned earlier, told us: "there are billions of websites on the web with gigantic amounts of valuable, ever-changing information like islands that do not connect or communicate with each other. I noticed that companies are spending millions on developing in-house solutions for connecting tens of data sources to their internal systems."

Browse AI solves a critical problem—reliably extracting structured data from fluid unstructured sources. As humans, we do this daily without thinking. When posed with a research question and armed with the internet, we fill in a few mental key-value pairs after perusing a handful of websites. Teaching a machine to do this at scale is an ordeal, but necessary if the machine is to be trusted to gather real-time information critical to an impending decision.
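To make "filling in key-value pairs" from a web page concrete, here is a minimal sketch using only Python's standard library. It is not how Browse AI or Browserbase work internally; the page content, the `TableExtractor` class, and the assumption that the facts sit in a two-column table are all invented for illustration. Real pages are far messier, which is exactly why this is hard at scale.

```python
from html.parser import HTMLParser

class TableExtractor(HTMLParser):
    """Collects the text of each <td> cell, grouped by <tr> row."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: list[list[str]] = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag == "td" and self.rows:
            self._in_cell = True
            self.rows[-1].append("")

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.rows[-1][-1] += data.strip()

def extract_pairs(html: str) -> dict[str, str]:
    """Turn two-column table rows into key-value pairs."""
    parser = TableExtractor()
    parser.feed(html)
    return {row[0]: row[1] for row in parser.rows if len(row) == 2}

# Hypothetical page content, made up for this sketch.
PAGE = """
<html><body><h1>Acme Bistro</h1>
<table>
  <tr><td>Cuisine</td><td>Italian</td></tr>
  <tr><td>Price</td><td>$$</td></tr>
  <tr><td>Open now</td><td>Yes</td></tr>
</table></body></html>
"""

print(extract_pairs(PAGE))
```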

We did, however, receive a perspective contradicting this thesis. Rob Cheung, CEO of Substrate (a cloud platform optimizing the performance of AI applications), said: "the hypothesis goes too far with how cohesive the infrastructure layer will be. Infra will be important and valuable to do well, but won't take on such a first class connective role because many of the important functions will be modularized and the flexibility that people get by using their own connective layers will be more attractive. Maybe similar to current systems infra ala AWS, etc."

Ultimately, Rob challenges the notion that a single service can empower an agent to play an effective role in a human's life. His thesis, in line with his company's market position, is that a cloud provider one level lower in the stack can facilitate the simultaneous use of many independent integrations, just as software on AWS is not bound to a full stack.

We believe that both realities can coexist. A cloud platform enables integrations, and integrations enable an agent. Value will accrue to the players in each level of the stack who abstract impossible processes and fractionalize expensive ones. Zapier abstracts the impossible process of connecting every major service in the world. Substrate abstracts the process of parallelizing and optimizing nodes in an AI sequence. They're both compatible and valuable in orchestrating an agent.

The infrastructure stack of an agent can be broken down into 5 levels: compute, orchestration, integration, inference, and interaction.

Companies in the compute layer abstract data, server, deployment, and scaling needs. This layer is made of traditional cloud behemoths like AWS, GCP, and Azure, but also emergent startups specializing in AI-focused ease and optimization, like Substrate. The compute layer is expensive, horizontal, and monetizes with usage.

The orchestration layer is made of the frameworks and implementations of the agentic system. Most prominent players in the orchestration layer are open-source—LangChain remains the most popular, with LangGraph specializing in complex agent architectures. Companies in this layer often monetize with additional, paid software tightly integrated with the main open-source counterpart. For example, LangChain sells LangSmith, an observability tool for LangChain and LangGraph, as a service.

We learned a more traditional database company can also be a great orchestration tool. We interviewed Philip Rathle, CTO of Neo4J (the leading graph database company). He said: "As architectures become intricate, we'll see agents interacting with other agents at various levels of granularity, from small tasks to macro-level operations. The challenge lies in defining how these agents should collaborate, share data, and work together. This interaction forms its own graph. A graph database could be a master orchestration point. While an orchestration framework is necessary, a graph database could provide a reliable way to persist and distribute information across a global application, ensuring every agent refers to the same rulebook, even across different companies."
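The idea that agent interactions "form their own graph" can be illustrated with a plain in-memory dictionary. This sketch does not use Neo4J or any graph database; the agent names and dependencies are hypothetical, and a production system would persist the graph rather than hold it in a Python dict.

```python
from graphlib import TopologicalSorter

# Hypothetical agents; each maps to the agents whose output it depends on.
AGENT_GRAPH = {
    "calendar_agent": set(),
    "restaurant_agent": set(),
    "booking_agent": {"calendar_agent", "restaurant_agent"},
    "notification_agent": {"booking_agent"},
}

def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return an order in which every agent runs after its dependencies."""
    return list(TopologicalSorter(graph).static_order())

def downstream(graph: dict[str, set[str]], agent: str) -> set[str]:
    """Agents affected, directly or transitively, if `agent` changes."""
    affected: set[str] = set()
    frontier = [agent]
    while frontier:
        current = frontier.pop()
        for name, deps in graph.items():
            if current in deps and name not in affected:
                affected.add(name)
                frontier.append(name)
    return affected

order = execution_order(AGENT_GRAPH)
print(order)
print(downstream(AGENT_GRAPH, "calendar_agent"))
```

Treating collaboration as a graph makes two questions cheap to answer: in what order agents can safely run, and which agents are affected when one of them changes.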

Ultimately, the infrastructure market can be broken down into a few key subsegments, according to that which an agentic system depends upon:

Compute to abstract away concerns of hosting, developing, running, and scaling agent software

Infrastructure companies that enhance autoscaling and manage marketplaces (e.g., Zapier, Pipedream)

Tool-based infrastructure (e.g., Browse AI, Browserbase) to abstract complex implementations; for example, Browserbase abstracts web browsing and makes it scalable and beneficial for agents

AI-specific databases to store retrievable unstructured data and encode complex relationships between entities (e.g., GraphRAG)

Interaction mediums to connect agent systems to the real world, e.g., chatbots and voice agents

Real-Time Data

A key insight from the infrastructure companies we spoke to is the importance of providing agents with real-time data.

This isn't a straightforward task—agents are powered by LLMs, and LLMs are text-in text-out black boxes.

Real-time data allows an agent to exist in the real world. Imagine having to live a day of your life with all sensory input arriving with a 10-minute delay… Simply unfeasible. As agents are trusted to make increasingly high-stakes decisions, such as the correct transportation to book or the appropriate amount and destination of a fund transfer, the data underneath the decision becomes the key to success.

We spoke with Pinecone, a leading vector database and the first to commercialize what is now known as "RAG." Imagine you could turn every piece of information - whether it's a sentence, an image, or even a sound - into a list of numbers. These numbers represent the "meaning" of that information in a way that computers can understand and compare. That's what vector embeddings do.

AI agents need to quickly find relevant information to answer questions or perform tasks, and vector embeddings allow them to rapidly search through vast amounts of data for the most relevant pieces. RAG (Retrieval-Augmented Generation), in its simplest terms, is a method that allows AI models to give better responses by first retrieving relevant information from a large database and then using that information to generate more accurate and informed answers.
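A toy version of this retrieve-then-generate flow is sketched below. The `embed` function is a deliberately crude stand-in (word counts) for a learned embedding model, the documents are invented, and no vector database is involved; the point is only to show similarity search feeding context into a prompt.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned model."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

# Hypothetical documents an agent might have indexed.
DOCS = [
    "the restaurant opens at 6pm on friday",
    "jack is free on friday evening",
    "the package was delivered on monday",
]

def retrieve(query: str, k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str) -> str:
    """Augment the question with retrieved context before generation."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"

print(build_prompt("when is jack free on friday"))
```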

One of the most exciting aspects of RAG is its ability to work with real-time data. This means AI agents don't have to rely solely on the information they were initially trained on. For example, an AI assistant for a weather service could use this technology to access the latest weather data, allowing it to provide current and accurate forecasts.

We connected with Lauren Nemeth, COO of Pinecone. She also pointed out the following on data freshness: "With consumer agent data constantly changing (you missed a package, called the contact center annoyed, abandoned your shopping cart etc.), the data needs to be refreshed in milliseconds. This is a clear area of innovation and expertise at Pinecone."
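One way to picture the freshness requirement is a store that timestamps every write and refuses to serve records older than a caller-specified age. The sketch below is a hypothetical in-memory class (`FreshIndex`), not Pinecone's API, and the explicit `now` argument exists only so the example is reproducible.

```python
import time
from typing import Optional

class FreshIndex:
    """In-memory store that timestamps each record on upsert."""

    def __init__(self) -> None:
        self._records: dict[str, tuple[str, float]] = {}

    def upsert(self, key: str, value: str, now: Optional[float] = None) -> None:
        """Insert or overwrite a record, stamping it with the write time."""
        ts = time.time() if now is None else now
        self._records[key] = (value, ts)

    def get_fresh(
        self, key: str, max_age_s: float, now: Optional[float] = None
    ) -> Optional[str]:
        """Return the value only if it was written within max_age_s seconds."""
        if key not in self._records:
            return None
        value, ts = self._records[key]
        current = time.time() if now is None else now
        return value if current - ts <= max_age_s else None

index = FreshIndex()
index.upsert("cart:alice", "2 items", now=100.0)
index.upsert("cart:alice", "abandoned", now=100.5)  # newer state overwrites

print(index.get_fresh("cart:alice", max_age_s=1.0, now=101.0))
print(index.get_fresh("cart:alice", max_age_s=1.0, now=105.0))
```

The first lookup returns the latest state; the second returns `None` because the record is older than the agent is willing to trust.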

Pinecone has made this technology more accessible and scalable for businesses. They recently launched a "Serverless" platform, which means companies can use this technology without worrying about managing complex infrastructure. It automatically scales based on usage, and customers only pay for what they use. The impact has been significant. We learned that since Pinecone launched its serverless architecture earlier this year, over 35,000 organizations have used it to index more than 35 billion pieces of information (or "embeddings").

Pinecone tells us the primary use cases of this data are AI agent assistants, generic AI search with RAG, and classification (which helps AI systems categorize information). Nemeth said, "Simply put, agents can only be as effective as the context you give them. Pinecone provides real time data from every possible unstructured document to ensure agents have the right context every time."

Neo4J pioneered GraphRAG, which combines traditional vector-based RAG with knowledge graphs, enabling AI agents to not only retrieve data but also understand and reason about relationships between data points. Graph databases hypothetically allow for better governance and traceability, ensuring AI systems offer not just answers but clear, auditable reasoning behind decisions, which sets GraphRAG apart from vector-only RAG systems. Philip Rathle of Neo4J said, "If I just ask an LLM a question without RAG, and it gives me an answer. It is 100% responsible for the answer. Now, if I do GraphRAG and pull back some context, it'll make a better decision because you're giving it better data."
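A rough sketch of the GraphRAG idea follows: start from the entities a search step surfaces, then walk their relationships and keep each traversed edge as an auditable fact. Everything here is an assumption made for illustration: the graph contents are invented, `vector_hits` is a simple string match standing in for vector search, and no Neo4J API is used.

```python
# Toy knowledge graph: entity -> list of (relation, entity) edges.
GRAPH = {
    "Order 1042": [("placed_by", "Alice"), ("contains", "Router X")],
    "Router X": [("has_known_issue", "Firmware 2.1 bug")],
    "Alice": [("contacted", "Support on Monday")],
}

def vector_hits(query: str) -> list[str]:
    """Stand-in for vector search: match entities named in the query."""
    return [entity for entity in GRAPH if entity.lower() in query.lower()]

def expand(entity: str, hops: int = 2) -> list[str]:
    """Walk outgoing edges up to `hops` steps, recording each fact used."""
    facts: list[str] = []
    frontier = [entity]
    for _ in range(hops):
        next_frontier = []
        for node in frontier:
            for relation, target in GRAPH.get(node, []):
                facts.append(f"{node} --{relation}--> {target}")
                next_frontier.append(target)
        frontier = next_frontier
    return facts

def graph_rag_context(query: str) -> list[str]:
    """Combine the search hits with the relationships around them."""
    context: list[str] = []
    for entity in vector_hits(query):
        context.extend(expand(entity))
    return context

for fact in graph_rag_context("Why was order 1042 refunded?"):
    print(fact)
```

Because every line of context is an explicit edge, a reviewer can trace which relationships informed the model's answer.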

One other company we're excited about is Fixie.ai (a company building AIs that can communicate as naturally as humans). We interviewed Zach Koch, CEO and co-founder. He said, "We're interested in making the interactions with the AI real-time. In the real world, humans get together in groups and discuss ideas rapidly. How do we build models + a stack that enables the AI to participate in this natural, free-flowing world of conversations (while still having access to tools, etc). The future we see is one of AI employees, but collaboration with humans will be critical to that."

Proactivity

So with all of this infrastructure, what use cases will AI agents support? This insight is the most fun.

Our main insight from this discovery process is that AI agents will increasingly be more "proactive." What is "proactivity" in the context of an AI agent?

Can an LLM be proactive? The technical answer is "no"—an LLM is a prediction model, triggered by a request to, well, make a prediction. The practical answer, however, is "yes." In reality, LLMs are orchestrated. There are layers upon layers of deterministic software controlling their operation.

A "proactive" AI system is one that runs iteratively in response to stimuli: for example, upon receipt of an email, upon the beginning of a new day, or upon a headline hitting the newswire.
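In code, this kind of proactivity is ordinary event-driven software wrapped around the model. The sketch below is a minimal illustration under that assumption; the `ProactiveAgent` class, the event names, and the handlers are hypothetical, and each handler simply returns a string where a real system would invoke an LLM.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], str]

class ProactiveAgent:
    """Deterministic wrapper that wakes the agent when a stimulus arrives."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def on(self, event_type: str, handler: Handler) -> None:
        """Register a handler to run whenever `event_type` occurs."""
        self._handlers[event_type].append(handler)

    def emit(self, event_type: str, payload: dict) -> list[str]:
        """Run every handler registered for the event; none means no action."""
        # Each handler is where an LLM call would go in a real system.
        return [handler(payload) for handler in self._handlers[event_type]]

def triage_email(payload: dict) -> str:
    return f"Drafted reply to {payload['sender']}"

def morning_briefing(payload: dict) -> str:
    return f"Prepared briefing for {payload['date']}"

agent = ProactiveAgent()
agent.on("email_received", triage_email)
agent.on("day_started", morning_briefing)

print(agent.emit("email_received", {"sender": "jack@example.com"}))
print(agent.emit("headline_published", {"title": "Rates cut"}))
```

The user never issues a request here; the email arriving is the trigger, and an event with no registered handler produces no action.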

Lin from Fireworks AI echoes our sentiment. She said: "In the future, AI will shift from reactive to proactive systems. Rather than only responding to user inputs, compound AI systems will anticipate user needs and take action on their own. The compound AI systems of the future will automate routine tasks, offer personalized recommendations, integrate multimodal data (text, image, audio, and video) and provide relevant, realtime information in context."

We spoke with Eric Jing, CEO of Genspark (an AI-powered search engine startup that uses specialized AI agents to generate customized pages for each query). He said: "The AI Agent engine is proactive, it can think of better queries, it can perform tasks on user behalf, etc. Users could just talk to "Agent Engine" about what tasks and then wait for tasks to be completed. That would save much user time and a totally different experience to get info or get tasks done." This transformation involves rebuilding the web's underlying data structures to support proactive AI agents.

Paul from Browserbase shared the following with us over email: "Right now, software makes you faster at doing tasks. In the future, software will complete tasks for you. This is the agentic future. People complete much of their tasks using websites and web browsers, so to truly automate the work of white collar knowledge workers, we need a web browser that can be controlled by AI."

I believe the killer use cases won't even require "giving tasks". Agents will evolve from reactive search engines to proactive, intelligent assistants capable of handling complex, multi-step tasks across various domains. A key aspect of this evolution is the development of autonomous AI agents with "goal-seeking capabilities", a trait previously unique to humans. AI agents can learn, find optimal paths, and adjust their strategies independently.

When it comes to AI agents, vertical or single agents will come together to form a "multi-agent system". By leveraging collective intelligence, these systems can tackle complex problems more effectively than single agents. They distribute tasks among specialized agents, dramatically enhancing efficiency and productivity.

One learning we had through this research is that unlike traditional chatbots (like ChatGPT), agents can understand and interact with their environment, making decisions based on context rather than fixed programmed rules. This adaptability allows them to provide personalized assistance at scale, effectively combining large-scale analysis with individualized attention.

In both consumer and enterprise contexts, the integration of proactive AI agents promises to transform daily life and business operations. Here are two scenarios:

For consumers, a network of specialized agents could manage various aspects of personal life seamlessly. From optimizing sleep schedules and health routines to managing finances and travel logistics, these agents work in concert to enhance productivity and well-being. To be more specific, let's say after a quick workout suggested by my Apple Health Agent, which has coordinated with my Nest to adjust the thermostat post-workout, I hop into an Uber booked by my Personal Assistant/Travel Agent, ensuring I'll arrive at my meeting on time.

In the enterprise realm, horizontal AI agents could revolutionize operations across departments, streamlining processes, and enabling human employees to focus on high-value tasks. For example, a Customer Service Agent gathers feedback from early adopters on a new product, while also taking direct actions to issue refunds, or ship out new devices. The customer service agent also identifies common issues affecting multiple customers, so it creates Jira tickets for the product team (who themselves will have coding copilots).

As these systems become more prevalent and intelligent, they will increasingly serve as proactive partners in navigating the complexities of modern life, seamlessly integrating various aspects of our digital and physical worlds to enhance productivity, decision-making, and overall quality of life.

We interviewed Bella Liu, CEO of Orby (an agent that automates complex business processes using generative AI). She said: "Over the next 5 to 10 years, we only see AI agents becoming more prevalent and intelligent along with the foundation models used to inform them. Everyone should be using AI agents to get work done and achieve more."

Trust and Reliability

"We're in inning 1." — Andy Berman, Director of AI at Zapier

Philip from Neo4J said, "I gave a talk and the way I started my talk was: 'How many of you would trust an LLM to plan your kid's birthday party, like to brainstorm, and so on?' A bunch of hands went up… Next, 'How many of you would trust an LLM (aka an agent) to hire the magician, decide whom to invite, draft the invitation, send the invitation, order the cake?' And of course, nobody raised their hands."

A common thread of insight across all the companies we spoke to, reflected in our own experience with agents, is the nascency of LLMs applied to environmentally aware decision-making.

Today, our sentiment is that even the best LLMs simply aren't smart enough to masterfully power multi-agent systems, or wise enough to make appropriate decisions.

Mike from Zapier shared that "agents and LLMs have been sort of like a black box so far – you play with the formatting of your inputs (prompts) and a few other parameters and hope to get accurate results. In reality, this causes major issues for businesses that use this technology in their products: It is extremely rare to get 100% accurate results from these systems, and when the results are not accurate, the user quickly loses confidence in the system and this leads to higher churns and less satisfaction."

In addition to Zapier, Mike recently co-founded The ARC Prize, which is a $1 million+ competition designed to advance progress toward artificial general intelligence (AGI). The goal is to beat François Chollet's 2019 ARC-AGI benchmark, which measures a system's ability to acquire new skills efficiently, something that current AI models, including large language models, struggle with.

Rob from Substrate echoed this perspective, applied to the cloud layer of the stack. He said that "Infrastructure for ML/AI is quite different mechanically than infrastructure that was set up for the web, and a lot of the nascent infra popping up to support these flows is trying to shoehorn the new mechanic into the old tooling. ML models are many orders of magnitude larger than a typical web app, the computation for a single request is many many orders of magnitude larger, the elasticity in the machinery is virtually non-existent. All of that means there is real hard systems work that needs to get done before any of this works at any kind of scale."

Zach from Fixie.ai shared with us, "The general thesis of "LLMs + Tools = powerful outcomes", is right, but the current brainpower of the LLMs isn't there yet. In addition, the lack of an actual reasoning or planning step inside the LLM prevents a lot of this from working well or reliably. This is why so many companies get stuck in the migration from prototype → reliable production app."

Model-level limitations restrict AI production deployments to low-risk use cases, limiting its broader application and potential. Solving these trust issues is paramount for AI agents to achieve mainstream adoption. The solution to this problem is likely a combination of deeply-integrated observability tools with error handling and fallbacks, fine-tuning to tightly control the behavior of a small model, and "smarter" foundational LLMs.
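The "error handling and fallbacks" part of that combination can be sketched as a validation wrapper: check every model output against hard rules, retry on failure, log each attempt for observability, and escalate when retries run out. The refund scenario, the 500 limit, and the function names are hypothetical, and the model is a stub that replays canned outputs.

```python
import json
from typing import Callable

def validate_refund(raw: str) -> dict:
    """Parse model output and enforce hard business rules."""
    data = json.loads(raw)  # malformed JSON raises a ValueError subclass
    amount = float(data["amount"])
    if not 0 < amount <= 500:  # hypothetical policy limit
        raise ValueError(f"amount {amount} outside allowed range")
    return {"action": "refund", "amount": amount}

def decide(
    model: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> tuple[dict, list[str]]:
    """Return a validated decision plus a log of every attempt."""
    log: list[str] = []
    for attempt in range(1, max_attempts + 1):
        raw = model(prompt)
        try:
            result = validate_refund(raw)
        except (ValueError, KeyError, TypeError) as err:
            log.append(f"attempt {attempt}: rejected ({err})")
            continue
        log.append(f"attempt {attempt}: ok")
        return result, log
    # Deterministic fallback once the model has failed every attempt.
    log.append("fallback: escalate to human")
    return {"action": "escalate_to_human"}, log

def make_flaky_model(outputs: list[str]) -> Callable[[str], str]:
    """Stub model that replays canned outputs, one per call."""
    it = iter(outputs)
    return lambda prompt: next(it)

model = make_flaky_model(["not json", '{"amount": 9999}', '{"amount": 42.5}'])
result, log = decide(model, "How much should we refund order 1042?")
print(result)
for line in log:
    print(line)
```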

One caution from our research is not to conflate "agents" with retrieval and inference systems.

As we've discovered, there's a nuanced interplay between agents, retrieval systems, and inference mechanisms that affects trust and reliability. While both agents and retrieval systems can be used to recall information, their approaches differ significantly. Retrieval systems operate deterministically, consistently returning the same text snippets for a given query. In contrast, AI agents use retrieval as a tool, formulating queries based on their understanding of the task, and can refine their approach if initial results are unsatisfactory. This distinction highlights the more sophisticated, albeit potentially slower and more expensive, nature of agent-based systems.
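The difference can be shown side by side. In this sketch, `retrieve` is a deterministic lookup over an invented two-entry corpus, while `agent_lookup` treats it as a tool and retries with a rewritten query. The `reformulate` function is a hard-coded stand-in for an LLM rewriting its own query, so the rewrites are assumptions, not model behavior.

```python
# Hypothetical corpus, keyed by topic.
CORPUS = {
    "refund policy": "Refunds are issued within 14 days of purchase.",
    "shipping times": "Standard shipping takes 3 to 5 business days.",
}

def retrieve(query: str) -> list[str]:
    """Deterministic retrieval: the same query always returns the same snippets."""
    return [text for topic, text in CORPUS.items() if topic in query.lower()]

def reformulate(task: str, attempt: int) -> str:
    """Stand-in for the LLM rewriting its query after an unsatisfactory result."""
    rewrites = [task, "refund policy", "shipping times"]
    return rewrites[min(attempt, len(rewrites) - 1)]

def agent_lookup(task: str, max_attempts: int = 3) -> tuple[list[str], int]:
    """Use retrieval as a tool, retrying with a new query when nothing comes back.

    Returns the snippets found and the number of retrieval calls made.
    """
    for attempt in range(max_attempts):
        results = retrieve(reformulate(task, attempt))
        if results:
            return results, attempt + 1
    return [], max_attempts

print(retrieve("Can I get my money back?"))
print(agent_lookup("Can I get my money back?"))
```

The agent succeeds where plain retrieval returns nothing, but it needs two retrieval calls to do so, which is the extra latency and cost the text refers to.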

At the core of AI agents are LLMs, which perform the actual inference to generate responses. LLMs are stateless, taking input (including system prompts, conversation history, and tool access) and producing output. The "agent" wrapper around the LLM includes prompts, tools, and orchestration systems that enable more complex behaviors like looping inference and self-reflection.

So for questions with exact answers, traditional rule-based or database-driven approaches often provide superior accuracy, transparency, latency, and cost-effectiveness. LLM-based decisions are better suited for more complex, nuanced, or open-ended tasks… but of course, might not be able to be fully trusted today.

To address trust and reliability concerns, future developments in AI agent infrastructure will likely focus on enhancing observability and error handling mechanisms, developing more sophisticated fallback systems, improving the integration of deterministic and generative approaches, creating standardized evaluation metrics for agent performance and reliability, and implementing robust governance and ethical frameworks for AI agent deployment.
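One simple way to integrate deterministic and generative approaches is a router that answers exact-lookup questions from a database and sends everything else to the model. The sketch below assumes a hypothetical order-status table and a stub LLM; the regular expression is a deliberately narrow illustration of "questions with exact answers".

```python
import re
from typing import Callable

ORDER_STATUS = {"1042": "shipped", "1043": "processing"}  # stand-in database

def route(question: str, llm: Callable[[str], str]) -> tuple[str, str]:
    """Answer exact-lookup questions from the database; send the rest to the LLM.

    Returns which path was taken along with the answer, so the choice is auditable.
    """
    match = re.search(r"status of order (\d+)", question.lower())
    if match:
        status = ORDER_STATUS.get(match.group(1), "unknown order")
        return "deterministic", status
    return "generative", llm(question)

def stub_llm(prompt: str) -> str:
    """Placeholder for a real model call."""
    return f"[model-generated answer to: {prompt}]"

print(route("What is the status of order 1042?", stub_llm))
print(route("How should I word an apology to this customer?", stub_llm))
```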

Conclusion & Emerging Monetization Strategies

Our investigation into the investability of AI agents confirms that the effectiveness of horizontal agents heavily depends on their ability to intercommunicate, collaborate, and interpret their environment in real-time. Interoperability emerges not just as a technical requirement but as a fundamental driver of value creation in agent infrastructure. Companies excelling in seamless integration and real-time data access are at the forefront of empowering agents to function effectively in complex, dynamic environments.

The vision of a singular, universally adopted AI agent infrastructure tool remains a subject of debate. While such a unifying infrastructure could offer significant efficiencies and standardization, the diversity of use cases and the need for flexible, modular solutions suggest that a single tool encompassing all functionalities is unlikely. Instead, the future landscape of AI agents is more likely to be shaped by a collaborative ecosystem of interoperable tools and platforms.

This ecosystem will need to address complexities of real-time data handling, specialized applications, and the varying needs across different sectors. As the AI agent market continues to evolve, we can expect to see dominant players emerge in specific layers of the agent stack, rather than a single tool governing all agent interactions.

As the AI agent ecosystem matures, a diverse array of monetization strategies is emerging, reflecting the multifaceted nature of this rapidly evolving market. Our research has uncovered several approaches that companies are leveraging to capitalize on the growing demand for AI-driven solutions:

Enterprise solutions stand out as a primary avenue for monetization. Zapier, for example, charges through its AI Actions product, an enterprise-exclusive offering that allows businesses to create custom agents using Zapier's API, demonstrating the high value placed on tailored AI solutions in the corporate world. The substantial market capitalization of enterprise AI companies like UiPath ($6.7B) underscores the significant growth potential in this sector.

API and infrastructure pricing is another time-tested monetization strategy, mirroring the success of cloud providers like AWS and Azure. With the AI-as-a-Service (AIaaS) market projected to exceed $98 billion by 2030, companies providing the backbone for AI operations (like LangChain or Substrate) are well-positioned to capture substantial recurring revenues as AI workloads scale rapidly, particularly in complex applications. Valuations in this category are already reaching billions. As AI agents become more complex and resource-intensive, this could become a significant revenue stream.

Ad-based revenue models are being explored by companies such as Genspark, drawing inspiration from the success of search engine giants like Google. With the digital advertising market valued at $521 billion in 2023, there's significant potential in this area. I (Jonathan) actually wrote about this in 2017 in my earlier conversational AI research into PASO (Personal Assistant Search Engine). By introducing AI agents that create "closed-loop user journeys" (targeted ads, personalized interactions), companies could capture significant ad revenue.

Finally, transaction-based revenue presents another lucrative path, particularly in e-commerce and travel sectors. This will come into play especially for consumer use cases like we mapped out above. By automating transactions and earning commissions through affiliate partnerships, AI agents could tap into the global e-commerce market, valued at $5.7 trillion in 2023. This model offers scalability and the potential to disrupt traditional online marketplaces.

With all of the above, we're so excited for an agentic future. The integration of AI agents into our daily lives and business operations promises significant advancements. These intelligent systems will streamline processes, enhance our decision-making, and free up human potential for more creative and strategic endeavors. That is a future I’m here for.

In the personal sphere, in the simplest ways, AI agents can optimize schedules, manage routine tasks, and provide personalized assistance tailored to my individual needs. In business, they may revolutionize everything from customer service to complex problem-solving, driving innovation and efficiency across industries.

While we're still in the early stages of this tech, we believe the potential for AI agents to augment human capabilities is substantial.